Introduction

Redis is an open-source, in-memory data store known for its high performance and wide range of supported data structures. It's commonly used for caching, real-time analytics, and other applications that require fast data retrieval. While Redis has unique features, it can also be used the way you would use Memcached, a simpler, high-speed in-memory caching system. Used purely as a key-value cache, Redis covers Memcached's functionality with the added advantages of data persistence and replication. This dual capability makes Redis a versatile tool that can save both time and computational resources.

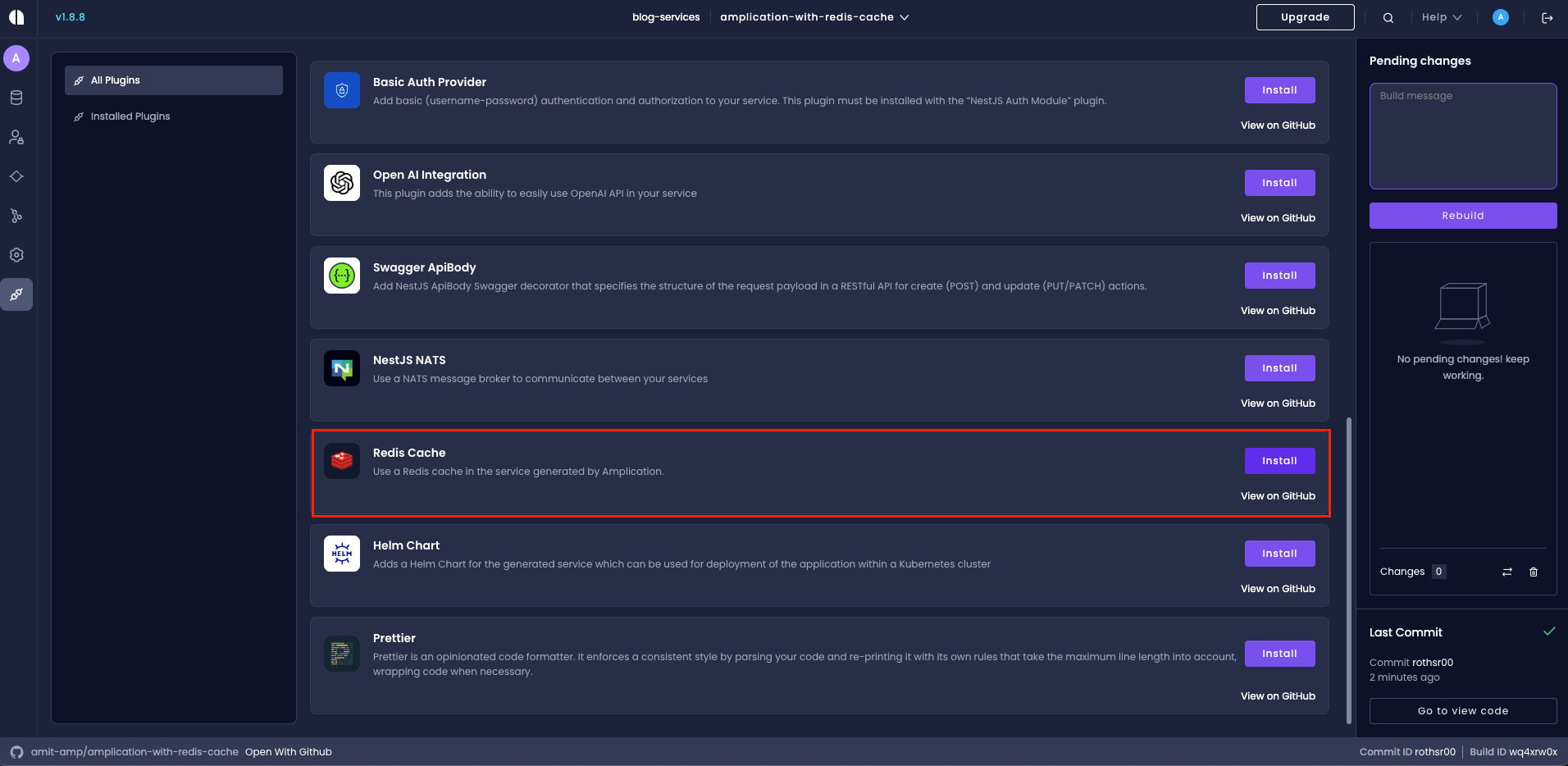

How to Install and Configure Redis in Your Amplication Project

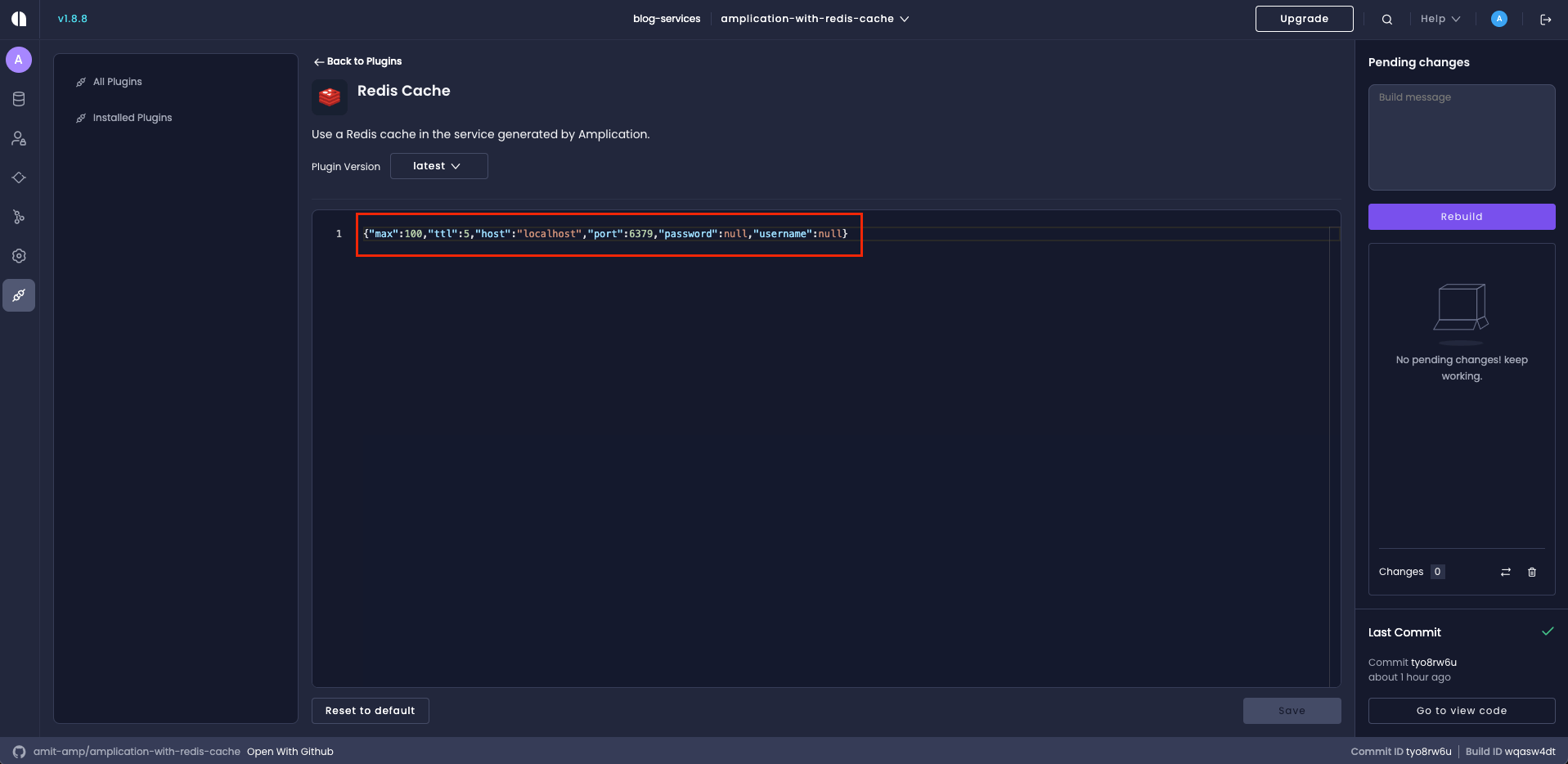

Setting up Redis caching in your Amplication project is straightforward. Start by creating a service within the Amplication platform. Once your service is set up, click the 'Build' button to start the build process, then merge the generated Pull Request. Next, go back to Amplication and install the Redis Cache plugin: navigate to the 'Plugins' section in your service's sidebar menu, where you'll see the lists of available and installed plugins (see the screenshot for reference).

After installing the Redis plugin, simply click 'Build' once more. Amplication will generate a new PR, but this time it will include all the necessary configurations and dependencies needed for your service to start using Redis effectively.

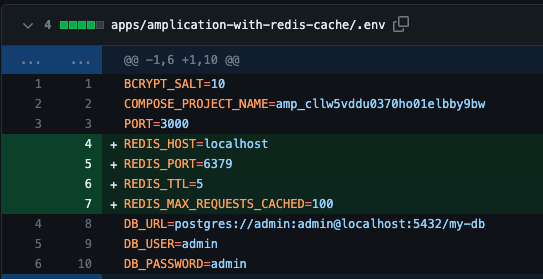

As you can see on this PR from our example repository, the Redis Cache plugin updates the following parts:

- Adds the required environment variables to .env:

  - REDIS_HOST and REDIS_PORT: these environment variables configure the Redis service's location.

  - REDIS_TTL: configures the Time-to-Live, in seconds, for each stored cache item.

  - REDIS_MAX_REQUESTS_CACHED: configures the maximum number of requests that can be cached.

-

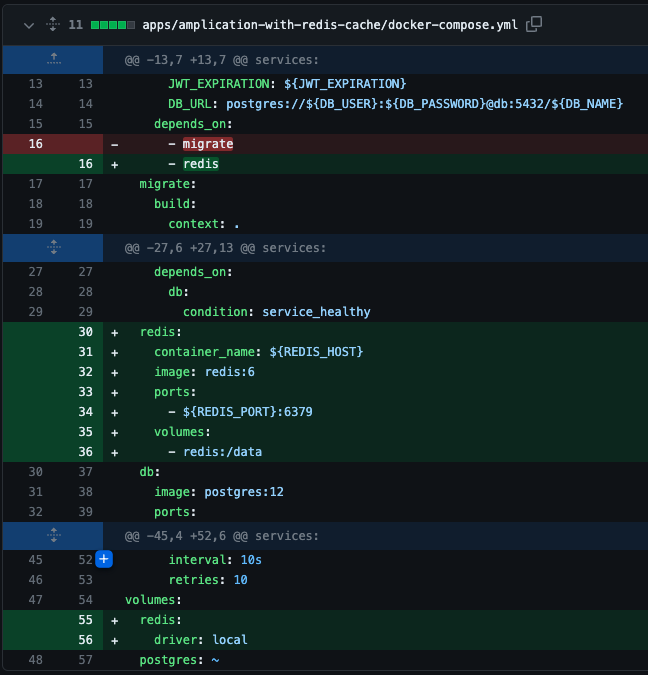

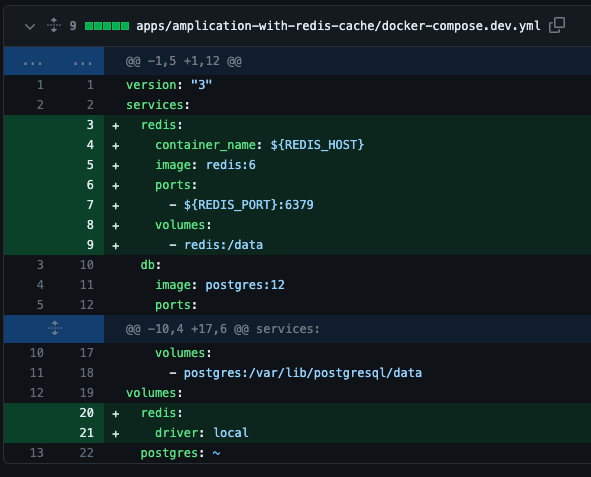

Adds the required service to the

docker-compose.ymlfile and thedocker-compose.dev.ymlfile.

..

The Docker Compose files are updated to include a new Redis service and a persistent volume. This ensures that a Redis instance runs in a Docker container and its data is stored persistently, simplifying the deployment process.

-

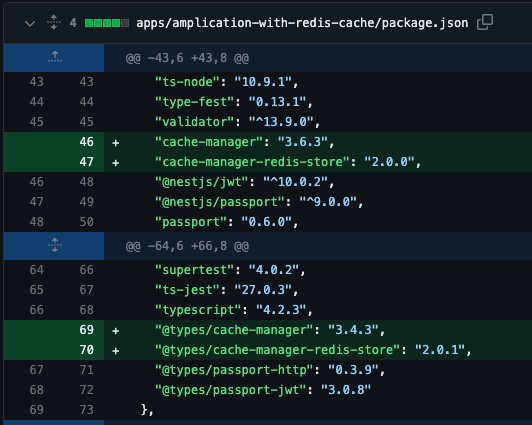

Adds the required dependencies to 'package.json'.

.These packages and their corresponding types packages are added to manage caching effectively. cache-manager is a multi-store caching library, and cache-manager-redis-store is its Redis adapter.

-

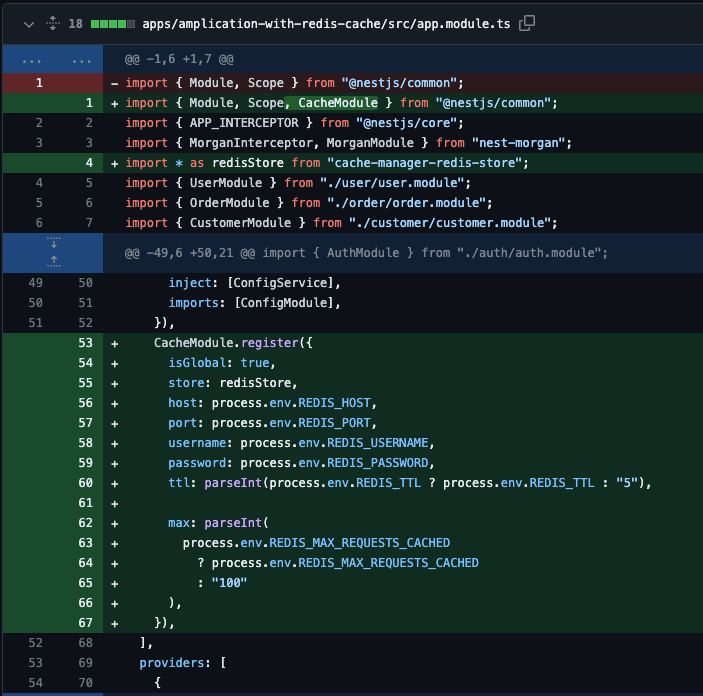

Adds the

CacheModuleconfigured to use Redis to theapp.module.tsmodule imports list.

.

CacheModule - this module abstracts caching logic, allowing you to switch between various cache stores effortlessly.

CacheModule Configuration:

- isGlobal: true - makes the cache module global, meaning it can be injected and used across your entire application without needing to re-import the module in other modules.

- store: redisStore - specifies that Redis is the underlying cache store.

- host, port, username, password - these are read from the environment variables, providing flexibility for different deployment scenarios.

- ttl - Time-to-Live (in seconds), parsed from the REDIS_TTL environment variable. It sets the expiration time for each cache entry. Note that in version 5 of cache-manager the ttl is in milliseconds.

- max - The maximum number of cache entries, determined by REDIS_MAX_REQUESTS_CACHED. It sets an upper limit on how many requests can be cached, to avoid memory issues.

The host, port, username, password, ttl and max properties can be configured via the Redis Cache plugin settings.
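To make the role of these variables concrete, here is a minimal sketch of how they could be read and validated before being handed to a cache configuration. It is not the code the plugin generates: the helper names (readRedisCacheSettings, intFromEnv, ttlInMilliseconds) are hypothetical, and the fallback values (localhost, 6379, 5 seconds, 100 entries) are assumptions chosen for illustration.

```typescript
// Hypothetical helper, for illustration only; not part of the generated code.
interface RedisCacheSettings {
  host: string;
  port: number;
  ttl: number; // seconds, as stored in REDIS_TTL
  max: number; // maximum number of cached entries
}

type Env = Record<string, string | undefined>;

// Parse a positive integer, falling back when the variable is missing or malformed.
function intFromEnv(value: string | undefined, fallback: number): number {
  const parsed = Number.parseInt(value ?? "", 10);
  return Number.isInteger(parsed) && parsed > 0 ? parsed : fallback;
}

// The fallback values below are assumptions, not values taken from the plugin.
function readRedisCacheSettings(env: Env): RedisCacheSettings {
  return {
    host: env.REDIS_HOST ?? "localhost",
    port: intFromEnv(env.REDIS_PORT, 6379),
    ttl: intFromEnv(env.REDIS_TTL, 5),
    max: intFromEnv(env.REDIS_MAX_REQUESTS_CACHED, 100),
  };
}

// cache-manager v5 expects milliseconds, so convert when targeting that version.
function ttlInMilliseconds(settings: RedisCacheSettings): number {
  return settings.ttl * 1000;
}

const settings = readRedisCacheSettings({
  REDIS_HOST: "redis",
  REDIS_PORT: "6379",
  REDIS_TTL: "3600",
});
console.log(settings, ttlInMilliseconds(settings));
```

Validating the values in one place means a typo in .env falls back to a known default instead of passing NaN to the cache store.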

Putting It into Practice: Add Custom Code Using Redis Cache

In this section, we'll delve into the real-world implementation of caching within the architecture of an e-commerce application, specifically focusing on optimizing customer data retrieval. E-commerce platforms frequently access customer data, but updates to this data are comparatively less frequent. Thus, caching emerges as a compelling solution to enhance application performance and reduce strain on the database.

There are two strategies for using the Redis cache in a NestJS application:

- Injecting the cache manager into a service

- Auto-Caching response at the controller level

Injecting the cache manager into a service

In this approach, you write the logic to check the cache before querying the database, and you're also responsible for setting new cache entries when new data is fetched. This "manual" management gives you a high level of control over what data gets cached, for how long, and under what circumstances the cache should be invalidated or updated.

Import Required Modules:

Before we proceed with the implementation, ensure that the following modules are imported into your service file, customer.service.ts:

    import { Injectable, Inject, CACHE_MANAGER } from "@nestjs/common";
    import { Cache } from "cache-manager";

Dependency Injection: Integrate Cache Manager:

Inject the CacheManager into your service by adding it to the constructor. Update your customer.service.ts as follows:

    -import { Injectable } from "@nestjs/common";
    +import { Injectable, Inject, CACHE_MANAGER } from "@nestjs/common";
     import { PrismaService } from "../prisma/prisma.service";
     import { CustomerServiceBase } from "./base/customer.service.base";
    +import { Cache } from "cache-manager";

     @Injectable()
     export class CustomerService extends CustomerServiceBase {
       constructor(
    +    @Inject(CACHE_MANAGER) private readonly cache: Cache,
         protected readonly prisma: PrismaService
       ) {
         super(prisma);
       }
     }

Implementing Cache Logic:

To put caching into action, add a new method called findCustomerById. This method adheres to the following workflow:

- Check Cache First: Before going to the database, we first check whether the customer details are already in the cache. We use a unique key such as customer_${customerId} for each customer.

- Fetch from Database if Not Cached: If the details are not in the cache, we fetch them from the database through the Prisma client's findUnique method.

- Cache the Result: Once we have the customer details, we cache them using the set method from the cache manager. We use the Time-to-Live (TTL) value from the .env file to set the expiration time.

    async findCustomerById(customerId: string): Promise<Customer | null> {
      const cacheKey = `customer_${customerId}`;

      // 1. Check the cache first.
      console.log("Checking cache for customer");
      const cachedCustomer = await this.cache.get(cacheKey);
      if (cachedCustomer && typeof cachedCustomer === "string") {
        console.log("Found customer in cache");
        return JSON.parse(cachedCustomer);
      }

      // 2. Cache miss: fetch the customer from the database.
      console.log("Fetching customer from database");
      const customer = await this.prisma.customer.findUnique({
        where: { id: customerId },
      });

      // 3. Store the result, using the TTL (in seconds) from the environment.
      console.log("Setting customer in cache");
      await this.cache.set(cacheKey, JSON.stringify(customer), {
        ttl: parseInt(process.env.REDIS_TTL || "5", 10),
      });

      return customer;
    }
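The same check, fetch, and store flow can be factored into a small reusable helper. The sketch below is an illustration rather than the plugin's generated code: getOrLoad, CacheLike, and InMemoryCache are hypothetical names, and the in-memory class stands in for Redis so the example runs on its own. Unlike the method above, it does not cache a null result, which is one possible design choice rather than a requirement.

```typescript
// Minimal cache surface for this sketch; get and set mirror the calls used in the article.
interface CacheLike {
  get(key: string): Promise<unknown>;
  set(key: string, value: string, options: { ttl: number }): Promise<void>;
}

// In-memory stand-in for Redis so the sketch runs without a server (illustration only).
class InMemoryCache implements CacheLike {
  private readonly entries = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<unknown> {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= Date.now()) {
      this.entries.delete(key); // expired: behave like a cache miss
      return undefined;
    }
    return entry.value;
  }

  async set(key: string, value: string, options: { ttl: number }): Promise<void> {
    this.entries.set(key, { value, expiresAt: Date.now() + options.ttl * 1000 });
  }
}

// Hypothetical helper: return the cached value, or load it, cache it, and return it.
async function getOrLoad<T>(
  cache: CacheLike,
  key: string,
  ttlSeconds: number,
  load: () => Promise<T | null>
): Promise<T | null> {
  const cached = await cache.get(key);
  if (typeof cached === "string") {
    return JSON.parse(cached) as T;
  }
  const fresh = await load();
  if (fresh !== null) {
    // Misses are not cached, so a record created later is visible immediately.
    await cache.set(key, JSON.stringify(fresh), { ttl: ttlSeconds });
  }
  return fresh;
}

// Usage: the loader runs once; the second call is served from the cache.
async function demo(): Promise<number> {
  const cache = new InMemoryCache();
  let databaseCalls = 0;
  const load = async () => {
    databaseCalls += 1;
    return { id: "42", firstName: "Ada" };
  };
  await getOrLoad(cache, "customer_42", 60, load);
  await getOrLoad(cache, "customer_42", 60, load);
  return databaseCalls;
}
```

Keep in mind that values round-tripped through JSON.stringify and JSON.parse lose their Date instances: fields such as createdAt come back from the cache as strings.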

Controller Integration:

Finally, integrate the findCustomerById method into your customer.controller.ts:

    @common.UseInterceptors(AclFilterResponseInterceptor)
    @common.Get("/:id")
    @swagger.ApiOkResponse({ type: Customer })
    @swagger.ApiNotFoundResponse({ type: errors.NotFoundException })
    @nestAccessControl.UseRoles({
      resource: "Customer",
      action: "read",
      possession: "own",
    })
    @swagger.ApiForbiddenResponse({
      type: errors.ForbiddenException,
    })
    async findCustomerById(
      @common.Param() params: CustomerWhereUniqueInput
    ): Promise<Customer | null> {
      const result = await this.service.findCustomerById(params.id);
      if (result === null) {
        throw new errors.NotFoundException(
          `No resource was found for ${JSON.stringify(params)}`
        );
      }
      return result;
    }

Observing the Results:

When the endpoint /api/customers/:id is invoked for the first time, the logs will indicate:

    Checking cache for customer
    Fetching customer from database
    Setting customer in cache
    "GET /api/customers/cllwb33lw0000p5db1a1c2e91 HTTP/1.1" 200

For subsequent invocations (during the TTL) the logs will display:

    Checking cache for customer
    Found customer in cache
    "GET /api/customers/cllwb33lw0000p5db1a1c2e91 HTTP/1.1" 200

Note that I extended the TTL setting to 3600 seconds to prolong the cache duration. Be cautious when opting for a low TTL: entries may expire before the next request arrives, so you would rarely get a cache hit.
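A long TTL has a flip side: after a customer is updated, the cached copy can stay stale until it expires. One common way to handle this is to delete the cache entry whenever the record changes. The sketch below illustrates the idea under stated assumptions: updateCustomerEmail, createMapCache, and the Map-backed database are hypothetical stand-ins, and the only cache operation it relies on beyond get and set is del, which cache-manager's Cache also provides.

```typescript
// Minimal cache surface for this sketch (get, set, del).
interface CustomerCache {
  get(key: string): Promise<string | undefined>;
  set(key: string, value: string): Promise<void>;
  del(key: string): Promise<void>;
}

interface Customer {
  id: string;
  email: string;
}

// Map-backed stand-in so the example runs without Redis (illustration only).
function createMapCache(): CustomerCache {
  const entries = new Map<string, string>();
  return {
    get: async (key) => entries.get(key),
    set: async (key, value) => {
      entries.set(key, value);
    },
    del: async (key) => {
      entries.delete(key);
    },
  };
}

const customerKey = (id: string): string => `customer_${id}`;

// Hypothetical update flow: write to the database first, then drop the stale entry.
async function updateCustomerEmail(
  cache: CustomerCache,
  database: Map<string, Customer>,
  id: string,
  email: string
): Promise<Customer | null> {
  const existing = database.get(id);
  if (!existing) return null;
  const updated: Customer = { ...existing, email };
  database.set(id, updated);
  await cache.del(customerKey(id)); // the next read repopulates the cache
  return updated;
}

// Usage: after the update, the cached entry for the customer is gone.
async function invalidationDemo(): Promise<string | undefined> {
  const cache = createMapCache();
  const database = new Map<string, Customer>([
    ["1", { id: "1", email: "old@example.com" }],
  ]);
  await cache.set(customerKey("1"), JSON.stringify(database.get("1")));
  await updateCustomerEmail(cache, database, "1", "new@example.com");
  return cache.get(customerKey("1"));
}
```

Deleting the entry, rather than overwriting it, keeps the write path simple: the existing read path repopulates the cache on the next request.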

Auto-Caching response at the controller level

This method is best suited for straightforward use-cases where caching can be applied uniformly across endpoints. It simplifies the implementation by abstracting away the manual management of the cache, while also providing customization options like setting unique cache keys or adjusting the Time-to-Live (TTL).

For this example, we'll add auto-caching to the GET /api/customers endpoint by adding the **findManyCustomers** method to the customer.controller.ts file and following these steps:

Adding the Cache Interceptor:

Include the CacheInterceptor within the UseInterceptors decorator:

@common.UseInterceptors(common.CacheInterceptor)

Configuring Cache Time-to-Live (TTL):

The default TTL is set to 5 seconds. However, if you wish to override this value, utilize the CacheTTL decorator:

@common.CacheTTL(CUSTOM_VALUE)

Customizing the Cache Key:

The interceptor auto-generates a cache key, but you can customize it using the CacheKey decorator:

@common.CacheKey(CUSTOM_KEY)

Please note that the CacheInterceptor only caches GET endpoints in RESTful services and is not currently compatible with GraphQL resolvers. In essence, the CacheInterceptor can be applied to any RESTful GET endpoint, and the same goes for the @CacheTTL() and @CacheKey() decorators.
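One consequence of overriding the key is worth spelling out. As far as I understand the interceptor, the auto-generated key for a GET request is based on the request URL, so different query strings get separate cache entries, while a fixed key makes them share one. The snippet below is a deliberately simplified model of that behavior, not NestJS's actual implementation; cacheKeyForGet is a hypothetical function written only for this illustration.

```typescript
// Simplified model of key selection for GET requests (assumption, not NestJS source code).
function cacheKeyForGet(requestUrl: string, customKey?: string): string {
  // A key set with @CacheKey() takes precedence; otherwise the request URL is used.
  return customKey ?? requestUrl;
}

// Auto-generated keys: each query string gets its own cache entry.
const firstPage = cacheKeyForGet("/api/customers?take=10");
const secondPage = cacheKeyForGet("/api/customers?take=20");
console.log(firstPage === secondPage); // false

// Fixed key: both requests map to the same cache entry.
const fixedFirst = cacheKeyForGet("/api/customers?take=10", "customers");
const fixedSecond = cacheKeyForGet("/api/customers?take=20", "customers");
console.log(fixedFirst === fixedSecond); // true
```

If that model holds, a fixed key such as "customers" is best suited to endpoints whose response does not depend on query parameters.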

Complete Example:

Here's how you can wrap everything together:

    @common.UseInterceptors(AclFilterResponseInterceptor, common.CacheInterceptor) // add the cache interceptor
    @common.CacheKey("customers") // override the autogenerated cache key
    @common.CacheTTL(1800) // override default TTL
    @common.Get()
    @swagger.ApiOkResponse({ type: [Customer] })
    @ApiNestedQuery(CustomerFindManyArgs)
    @nestAccessControl.UseRoles({
      resource: "Customer",
      action: "read",
      possession: "any",
    })
    @swagger.ApiForbiddenResponse({
      type: errors.ForbiddenException,
    })
    async findManyCustomers(@common.Req() request: Request): Promise<Customer[]> {
      console.log("Calling findManyCustomers");
      const args = plainToClass(CustomerFindManyArgs, request.query);
      return this.service.findMany({
        ...args,
        select: {
          address: {
            select: {
              id: true,
            },
          },
          createdAt: false,
          email: true,
          firstName: true,
          id: true,
          lastName: true,
          phone: true,
          updatedAt: false,
        },
      });
    }

Log Behavior:

Upon the first invocation of the /api/customers endpoint, the logs will display:

    Calling findManyCustomers <= this log comes from the controller body
    "GET /api/customers HTTP/1.1" 200

For all subsequent calls within the TTL window, this log entry won't appear, indicating that the data is being served from the cache.

Last words

Amplication's Redis Cache Plugin offers a powerful yet effortless way to enhance your application's performance. With its straightforward setup and integration, you can unlock the benefits of caching in no time. This streamlined process makes Amplication an invaluable tool for developers aiming for optimized application performance, reducing the time and complexity traditionally involved in setting up Redis caching.